Powering PANOPTES

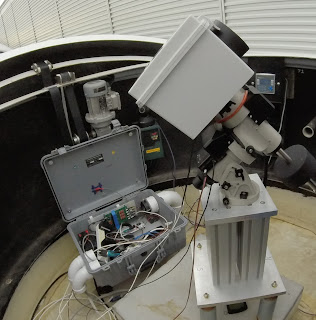

The baseline PANOPTES design used in PAN001 includes three custom circuit boards: two protoboards and a custom PCB. The latter is rather expensive because it uses five solid-state relays that cost at least $20 apiece. There is also a footprint error in the PCB layout for each of those relays, which the person soldering the board has to repair. For each type of part we want to add to a PCB layout, we need to know the exact position of each pin of that part, what that pin does (e.g. a power input or a specific signal), and the area covered by the body and leads/pins of the part. This information, called the footprint, is used when going from the schematic to a layout of parts and traces on a board, and it helps avoid positioning two parts so that they collide when you go to populate the board. Luc, an EE at Gemini South in Chile and the designer of the PANOPTES PCBs, has been designing a new PCB, the "Interface Board", two of which will be able to replace the t...